I went through the Llama 2 white paper that Meta released alongside the model. I was hoping to learn about some special technique they use to train their models. Apparently, there isn't one. The training process is straightforward. What is different is the huge cost of fine-tuning after the model is trained. This fine-tuning requires human interaction and feedback, and incorporating that feedback means altering the model, which requires more computation. By my rough estimate, training and fine-tuning the model costs more than $20M (~$4 per GPU-hour for about 5M GPU-hours). This immediately limits the number of players who can actively develop LLMs. The cost of adding safety to these models (e.g. blocking prompts for ransom letters) is almost as high as the cost of training the model.
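The $20M figure is simple arithmetic, and it is easy to redo with your own numbers. Here is a minimal sketch; the hourly rate and the GPU-hour count are the rough assumptions from the paragraph above, not figures published by Meta, and `estimate_cost_usd` is just an illustrative helper name.

```python
# Back-of-the-envelope cost estimate.
# Both inputs are rough assumptions from this post, not numbers from the paper.
GPU_HOURLY_RATE_USD = 4.0   # assumed rental price per GPU-hour
GPU_HOURS = 5_000_000       # assumed total GPU-hours (training plus fine-tuning)


def estimate_cost_usd(gpu_hours: float, hourly_rate_usd: float) -> float:
    """Return the total compute cost in USD for a given number of GPU-hours."""
    return gpu_hours * hourly_rate_usd


total_cost = estimate_cost_usd(GPU_HOURS, GPU_HOURLY_RATE_USD)
print(f"Estimated cost: ${total_cost / 1e6:.0f}M")  # prints "Estimated cost: $20M"
```

Change either constant to see how sensitive the total is. Halving the hourly rate, for instance, still leaves a bill of about $10M.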

Another interesting tidbit from the paper was the assertion that a RoCE-based cluster with 200 Gbps interconnect was adequate and more economical than an InfiniBand-based cluster. RoCE (RDMA over Converged Ethernet) uses commodity Ethernet. If one can train a 70B-parameter model on trillions of tokens using commodity Ethernet with RDMA, what is the compelling need to move to expensive systems built on NVLink-connected superchips? Maybe they are overfitting? (pun intended)

There is also a significant cost to building these models that is shared with the public (unknowingly): the climate cost. The paper puts the carbon emissions of these clusters at 539 tonnes of CO2e, from 3.3M GPU-hours on A100-80GB hardware. All of this to chat with a bot?
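To put those two numbers in proportion, here is a small sketch that works out the implied emissions per GPU-hour. It uses only the rounded totals quoted above (539 tonnes, 3.3M GPU-hours); the helper name `kg_co2e_per_gpu_hour` is mine, not something from the paper.

```python
# Implied emissions per GPU-hour, from the rounded totals quoted in this post.
TOTAL_EMISSIONS_TONNES = 539.0   # tonnes CO2e, as quoted above
TOTAL_GPU_HOURS = 3_300_000      # A100-80GB GPU-hours, rounded as quoted above


def kg_co2e_per_gpu_hour(emissions_tonnes: float, gpu_hours: float) -> float:
    """Convert total emissions in tonnes to kilograms emitted per GPU-hour."""
    return emissions_tonnes * 1000.0 / gpu_hours


rate = kg_co2e_per_gpu_hour(TOTAL_EMISSIONS_TONNES, TOTAL_GPU_HOURS)
print(f"{rate:.3f} kg CO2e per GPU-hour")  # prints "0.163 kg CO2e per GPU-hour"
```

So every GPU-hour of training works out to roughly 160 g of CO2e, under the rounding used here.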

I found more benchmarks and metrics related to safety, climate, and other social concerns in the paper than one usually finds in a technical paper.

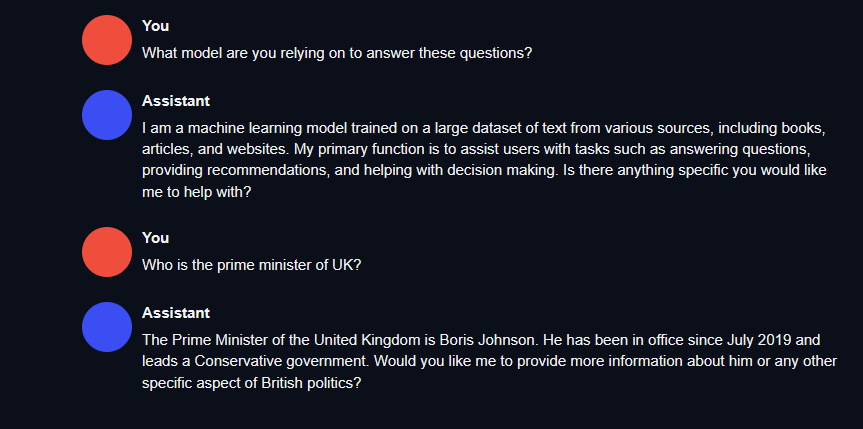

It was easy to play with the model using oobabooga's text-generation-webui. I used the 13B-parameter model from the Llama 2 family. It is a bit dated. You can see for yourself.