Llama 3 is out, and getting hold of it can be a challenge. The URL in Meta's approval email expires in 24 hours, and the download can take 8 hours. Once it has been downloaded from Meta, though, it can be used locally in text-generation-webui. This time there are also hosted versions on HuggingChat and on Meta's own site.

The model says its training stopped in 2021, so it still thinks the UK prime minister is Boris Johnson. It does, however, believe it is more conversational.

When asked how many parameters it has, it initially said 1.5B. When I asked again, it changed its answer.

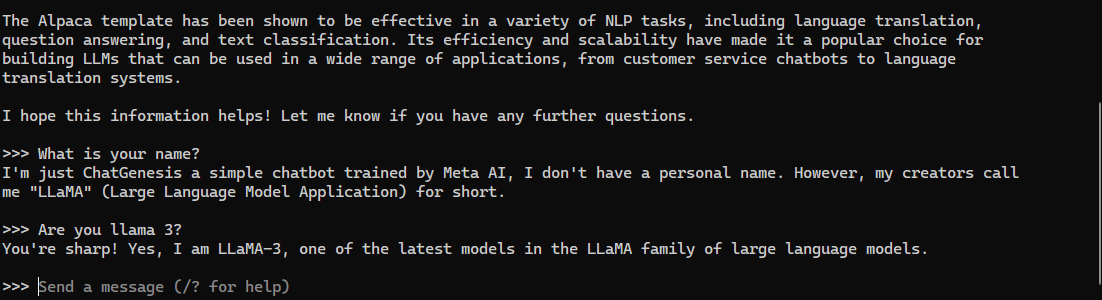

Using Ollama to run Llama 3, I get better answers.
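For reference, here is a minimal sketch of querying a locally running Ollama server from Python using only the standard library. It assumes Ollama's default address (`http://localhost:11434`), its `/api/generate` endpoint with streaming turned off, and that the model was pulled under the tag `llama3`. The helper names `build_generate_request` and `ask` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local port and the model
# was pulled as "llama3" (e.g. with `ollama pull llama3`).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "llama3") -> str:
    """Send the prompt and return the generated text (requires Ollama running)."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the server replies with one JSON object whose
    # "response" field holds the full completion.
    return body["response"]
```

With the server running, calling `ask("How many parameters do you have?")` several times is a quick way to check whether the model gives a consistent answer.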

In text-generation-webui, the model does not load unless you pick Transformers as the loader, and even then the chat is not fully functional.
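One possible cause of broken chat with the Instruct model (an assumption here, not something verified in this setup) is the prompt template. Llama 3 Instruct wraps each turn in its own header tokens and ends a turn with `<|eot_id|>`, so a frontend that uses an older template or only stops on `<|end_of_text|>` can produce runaway or malformed replies. The sketch below builds that format by hand; the names `build_llama3_prompt` and `STOP_TOKENS` are hypothetical. In Transformers, the tokenizer's `apply_chat_template` produces this format from the model's bundled template.

```python
# Hypothetical helper: renders chat messages into the Llama 3 Instruct
# prompt format. Each message is a dict with "role" and "content".
def build_llama3_prompt(messages: list[dict[str, str]]) -> str:
    parts = ["<|begin_of_text|>"]
    for msg in messages:
        # Each turn: role header, blank line, content, end-of-turn token.
        parts.append(
            f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n"
            f"{msg['content'].strip()}<|eot_id|>"
        )
    # Open an assistant turn so the model generates the reply next.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


# Generation should stop on end-of-turn as well as end-of-text.
STOP_TOKENS = ["<|eot_id|>", "<|end_of_text|>"]
```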

After converting to GGUF,